DEPARTMENT OF COMPUTER SCIENCE

presents

for

GATEWAYS 14

featuring

Qu-Bits

The IT Magazine

INFOBAHN

From HOD's desk

Prof. Joy Paulose | HOD, Dept of Computer Science

Right from its inception, Christ University has believed in inculcating among its students a sense of belonging, as well as a drive to achieve nothing short of excellence. Students, whether from different parts of the country or from across international borders, have been instilled with the values of social responsibility, moral uprightness and purity of thought. This in turn contributes towards the holistic development of the individual, resulting in Christites making a mark in whatever they set out to achieve.

The Department of Computer Science has always worked towards nurturing and moulding students with all the skills and confidence required to meet the challenges triggered by technological advancements. The enthusiasm, focus and sense of purpose of staff and students alike have contributed to the department's continued success over the years.

GATEWAYS has been the perfect opportunity for students of computer science to test their strengths and improve on their weaknesses through a host of technical and non-technical events. Students get a chance to pit themselves against participants from across the country, which is vital as it gives them an insight into where they stand. We at Christ University constantly strive towards perfection and leave no stone unturned when it comes to giving students a chance to showcase their talents and test the limits of their potential.

As we march forward, we hope to attain the high standards we have set and continue scaling greater heights in the pursuit of success.

Dr. Nachamai M

Associate Professor

Christ University

QUANTUM COMPUTING

Quantum Computing - A Prologue

"If the number of transistors on a microprocessor continues to double every 18 months, the year 2020 or 2030 will find the circuits on a microprocessor measured on an atomic scale", as Moore's Law suggests. The next logical step on this path is quantum computing. The word "quantum" comes from Latin, meaning 'amount'. In physics it denotes the smallest amount of a physical quantity that can exist independently.

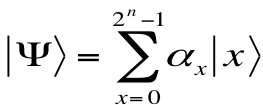

"The number of transistors on a microprocessor continues to double every 18 months, the year 2020 or 2030 will find the circuits on a microprocessor measured on an atomic scale" states the Moore's Law. The next logical step in this path is "Quantum Computing". The word "Quantum" comes from Latin language, meaning 'amount', the word is from the branch of physics stating that the smallest amount of a physical quantity that can exist independently. This exact meaning cannot be carried as it is, to the field of computer science. Everybody is aware that all computers starting from the Turing machine work with bits. Each bit can have two states a 0 or a 1. The quantum computers work with quantum bits or Qubits, that is not limited to two states, which exists in superposition. Qubits can be a 0, 1 or both. Superposition is the ability to exist in multiple states at the same time. Since quantum computers can work with multiple states simultaneously they are able to inherent parallelism. Quantum computers are devices made for complex massive data computations. The state of a qubit |Ψ| can be thought of as a vector in a two-dimensional Hilbert Space, H2, spanned by the Basis vectors 0 and 1. Unlike a classical bit, though, a qubit can be in any linear combination of these states: | Ψ | = α 0 + β 1, where α and γ are complex numbers. If the q-bit is measured it will be found in state 0 or state 1 with probabilities |α|2 or |γ|2, and the system will then be left in the state 0 or 1. What can be done with single Qubits is generally very limited. For more ambitious information, processing multiple bits must be used and manipulated. If we have n qubits, we can think of them as representing the binary expression for a n-bit integer between 0 and 2n-1.

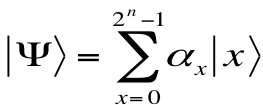
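The measurement rule for a single qubit can be tried out numerically. The following is a minimal Python sketch, not a quantum simulator: the function names `probabilities` and `measure` are illustrative assumptions, and an ordinary pseudo-random generator stands in for the physical measurement.

```python
import math
import random


def probabilities(alpha, beta):
    """Return (P(0), P(1)) for the state alpha|0> + beta|1>."""
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return p0, p1


def measure(alpha, beta, rng=random):
    """Simulate one measurement: 0 with probability |alpha|^2, otherwise 1."""
    p0, _ = probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# Equal superposition (hypothetical example state): both outcomes equally likely.
amp = 1 / math.sqrt(2)
p0, p1 = probabilities(amp, 1j * amp)
counts = [0, 0]
for _ in range(1000):
    counts[measure(amp, 1j * amp)] += 1
print(p0, p1, counts)
```

Repeating the measurement many times, as in the loop, is the only way to see the probabilities: each individual run yields just a 0 or a 1.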

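The most general state of n qubits can be written as a superposition of all $2^n$ basis states, $|\psi\rangle = \sum_{x=0}^{2^n-1} c_x |x\rangle$, where the complex amplitudes $c_x$ satisfy $\sum_x |c_x|^2 = 1$.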

This key is the product of two large prime numbers. Factorizing such a large number is a difficult task for a hacker, so credit card numbers and bank information stay safe. Quantum computing technology will only continue to improve. At the moment, it is impossible even to predict which technology will win out in the long term. This is still science, but it may become technology sooner than we expect. Theory also continues to advance. Various researchers are actively looking for new algorithms and communication protocols that exploit the properties of quantum systems. Quantum computers can also be used to efficiently simulate other quantum systems. Perhaps someday quantum computers will be used to design the next generation of classical computers. The quantum revolution is already under way, and the possibilities that lie ahead are limitless.

Jimsun George

Alumni

Christ University

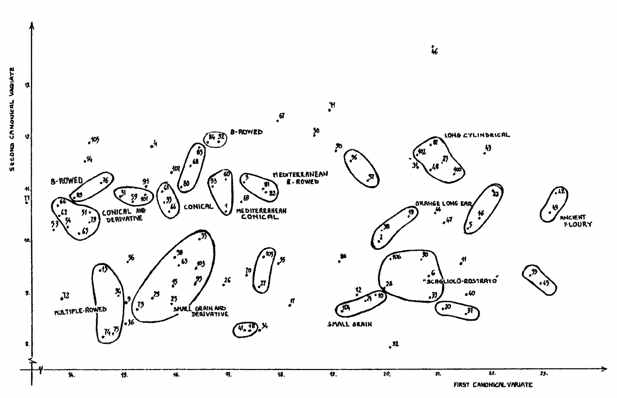

NTSYS, NUMERICAL TAXONOMY

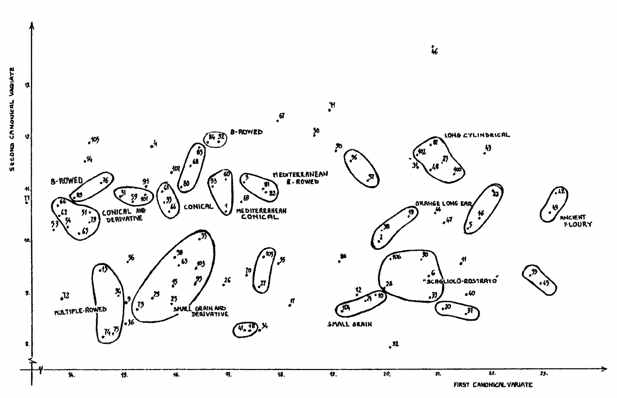

Enormous data dumps are the norm in today's business intelligence world, and steep filtering of fields is required to understand and review the data. NTSYS is software used to discover pattern and structure in multivariate data, so that even a bulk of de-normalized data can begin to make sense. For example, a sample of data points may suggest that the samples have come from two or more distinct populations, or one may estimate a phylogenetic tree using the neighbor-joining or UPGMA methods for constructing dendrograms, giving the user a high-level overview of the data itself. Of equal interest is the discovery that the variation in some subsets of variables is highly inter-correlated.

1. In NTSYS, correlation, distance, 34 association coefficients, and 11 genetic distance coefficients are available as measures of similarity and dissimilarity.

2. Clustering can be done with UPGMA and other hierarchical SAHN methods, allowing for ties. The neighbor-joining method and several types of consensus trees are also available.

3. Minimum-length spanning trees and graphs (unrooted trees) from the neighbor-joining method offer a simpler, more approachable view of the data.

4. Ordination is supported through principal components and principal coordinates analysis, correspondence analysis, metric and non-metric multidimensional scaling analysis, singular-value decompositions, projections onto axes and Burnaby's method. Programs for canonical variates analysis, multiple factor analysis, common principal components analysis, partial least-squares, multiple correlation, and canonical correlations are also included.
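The UPGMA clustering mentioned in the list can be illustrated with a small sketch. This is not NTSYS code: the function name `upgma`, the input format, and the four-sample distance table are assumptions made up for illustration, and ties are simply resolved by taking the first minimum rather than by any tie-handling scheme NTSYS may use.

```python
from itertools import combinations


def upgma(labels, dist):
    """Cluster `labels` by UPGMA (average linkage).

    `dist` maps each pair of labels, given as a tuple, to a distance.
    Returns the merges in order as (cluster_a, cluster_b, height).
    """
    sizes = {name: 1 for name in labels}  # current clusters and their sizes
    d = {frozenset(pair): value for pair, value in dist.items()}
    merges = []
    while len(sizes) > 1:
        # Pick the closest pair of current clusters.
        a, b = min(combinations(sizes, 2), key=lambda p: d[frozenset(p)])
        height = d[frozenset((a, b))] / 2
        merged = (a, b)
        # Distance to the new cluster is the size-weighted average.
        for other in sizes:
            if other == a or other == b:
                continue
            d[frozenset((merged, other))] = (
                sizes[a] * d[frozenset((a, other))]
                + sizes[b] * d[frozenset((b, other))]
            ) / (sizes[a] + sizes[b])
        sizes[merged] = sizes.pop(a) + sizes.pop(b)
        merges.append((a, b, height))
    return merges


# Hypothetical distances between four samples.
example = {
    ("A", "B"): 2, ("A", "C"): 6, ("B", "C"): 6,
    ("A", "D"): 10, ("B", "D"): 10, ("C", "D"): 10,
}
merges = upgma(["A", "B", "C", "D"], example)
for a, b, height in merges:
    print(a, b, height)
```

The list of merges, read top to bottom, is the dendrogram: each line joins two clusters at the given height.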

Jonathan Fidelis Paul

MCA-I

Christ University

THE SOFT WORLD

Ever wondered who you are? Ever thought about why you are here, and what you are supposed to do? Are we for real, or just an image in motion, as proposed by physicists of our time who say that the entire universe can be seen as a two-dimensional structure painted on a cosmological horizon? In simple terms, just as the complete information of a holographic source can be recovered from just a fragment of it, we can do the same for our universe.

We are presently in a "Digital Age", where information that once resided only in neurons and on impressed surfaces is now being encoded into almost anything and everything. The best example is the storage of information on cassettes and hard disks (the latter having made the former almost obsolete!). But we did not stop here. In our quest to devour the knowledge and possibilities that the universe has to offer, we have tried to mimic our own creation. Yes, that's true. We are moving from this age to another: that of Artificial Intelligence. Having become able to store information, we are trying to generate and manipulate it automatically. Several breakthrough innovations have been made, ranging from the elementary perceptron to the most recent algorithms that let a computer recognise objects without human intervention. That's in the realm of software. But hardware is not far behind. From the abacus, to vacuum tubes, to the minute integrations on chips, we have reached an age where a robotic fly can be sent on an espionage mission.

Robotic pets are already on the market, prosthetic machines are already in use, and humanoids are in the making. Man has attempted to link the brain to machines and succeeded. All this is the result of our attempt to reshape nature, fuelled by our never-dying thirst of curiosity.

We will soon be living with a new species: artificially intelligent beings who can outdo us. What we lack, being human, they will possess. But will this new population grow intelligent enough to defy its creators? Would the fruit from the tree of knowledge be plucked again? Why not? If man could disobey God, what would prevent these new beings from disobeying us?

Come what may, we will find a solution to it. That's what defines us. Nature has always been on our side. We just need to understand it better, for we live in a soft world: a place which emanates information relative to our perception.

But why discuss all this? Believe it or not, consciously or subconsciously, we are manipulating this information every day. The elementary particles, like quarks and leptons, that have given rise to this universe go unnoticed by us. But does everyone miss them? Probably not! Using nature as an inspiration, man has from time immemorial tried to develop machines and tools which can ease his work. Be it the wheel, the comprehension of zero, the ancient automatons (the Antikythera mechanism, the earliest known analogue computer), the pendulum clock, the breakthrough in atomic physics ($E = mc^2$), the formulation of logic or the devising of the wonderful tool named calculus, all have boosted progress in our effort to get closer to knowing the universe.

Mannu Nayyar

II - MCA

Christ University

TOP 5 BLOGS

1. lifehacker.co.in

The blog posts cover a wide range of topics including: Microsoft Windows, Mac, Linux programs, iOS and Android, as well as general life tips and tricks.

2. Joy of programming

http://zingprogramming.blogspot.in

The blog gives insight into various concepts in UNIX and Data Structures, and details the various coding events conducted at Christ University.

3. I Unlock Joy

www.microsoft.com/india/developer/windowsphone/

I Unlock Joy brings an opportunity for application developers and students to win a Windows Phone. Build apps and get a Windows Phone.

4. www.addictivetips.com

AddictiveTips is a tech blog focused on helping users find simple solutions to their everyday problems. It reviews the best desktop, mobile and web apps and services out there, and offers useful tips and guides for Windows, Mac, Linux, Android, iOS and Windows Phone.

5. http://blog.laptopmag.com/

The blog covers the latest trends and news about smartphones, laptops and technology. Users can also search for suitable laptops and smartphones according to their preferences.

TOP 5 APPS

1. Videoder:

Videoder is an awesome video download utility that lets you search for and download shared videos from the internet.

2. Sygic - A Navigation App

3. iOnRoad - Augmented Reality Driving

The iOnRoad App provides a range of personal driving assistance functions including augmented driving, collision warning and "black-box" like video recording.

4. Flipboard

The app turns your news, Twitter and Facebook feeds into your own personal magazine, and makes it easy to discover new content through its curated channels focused on everything from tech to fashion.

5. Mathway

Mathway is a problem solving resource that solves problems in Basic Math, Pre-Algebra, Algebra, Trigonometry, Statistics, Linear Algebra, etc.

TOP 5 WEBSITES

1. http://www.wix.com

Wix.com is a cloud-based web development platform that allows users to create HTML5 websites and mobile sites.

2. http://www.wolframalpha.com/

Wolfram|Alpha introduces a fundamentally new way to get knowledge and answers: not by searching the web, but by doing dynamic computations based on a vast collection of built-in data, algorithms, and methods.

3. https://google.com/settings/takeout

Google Takeout is a project by the Google Data Liberation Front that allows users of Google products, such as YouTube and Gmail, to export their data to a downloadable ZIP file.

4. https://www.udacity.com/

Freemium MOOCs (Massive Open Online Courses).

5. http://www.extremetech.com/

One-stop-shop for serious technological needs. If you need to know how the disks grind and how the chips hum, you're at the right place.

6. http://www.zdnet.com

ZDNet brings together the reach of global and the depth of local, delivering 24/7 news coverage.

Dimple Mathew

I - MCA

Christ University

COMPOSICIÓN

SOFTWARE PIRACY

There was a time when Stealing was a Crime.

There were days when Cheating was a disgrace.

Time is Changing. Time is Moving.

Changed to an Extent.

When theft is not Condemned.

Piracy is a mere Worm.

A disgrace to the Society.

A disgrace to the world of software.

A disgrace to one's own country.

What is piracy, do you know it?

But you still commit it

People infringe copyrights by downloading.

You know about it but you still commit it

People we have to desolations

"FOR SOME PEACE IS NOT THE ABSENCE OF VIOLENCE : BUT THE PRESENCE OF PEACE"

It's a moral duty to change our society

There's always been a silver-lining to save us.

It's a morning for Us to change.

Time is on our side so let us change

SO LET US CHANGE!!!!!

Software Piracy is Illegal, unethical and immoral

An example for Anti-Piracy Warning

Pema Gurung

I - MCA

Christ University

LIFE IS A COMPUTER SYSTEM

Processor is like your Parents,

As they control you.

Hard disk is your friends,

They are always there when you are in need.

Virus is your enemy,

They always block your way.

Antivirus is your doctor,

They always cure you.

Firewall is your security guard,

They don't allow unknown people in.

WI-FI is like new friends,

The moment you turn it on, you get a new connection.

User is you yourself.

Que la composición

Apoorva

III - MCA

Christ University

LIFE WITH COMPUTERS

First and foremost, it is always beneficial to have good examples of movement if you are a visual learner. If a teacher tells you to step to the right and turn to the left, and you absorb things visually, then you may struggle with the direction provided to you. However, if you can see a sort of "blueprint" of the move on your computer screen, it can help you understand the choreography more clearly.

Even if you have a good understanding of how your body should move, animation can help jumpstart your memory before a rehearsal. It is always beneficial to visually review choreography before going to class, and if you have a cartoon representation of two figures going through certain steps, it can save you the trouble of having to relearn something you otherwise wouldn't have forgotten.

As an upcoming programmer not really interested in the traditional ways of corporate, project-oriented programming, I find that a deep understanding of movements, angles and distance, an ability to make quick and necessary dynamic changes, and an ability to communicate requirements and analyse them deeply all help in developing a good design. This is inspired by the Unix philosophy: "do one thing and do it well".

Finally, as creators of dance, choreographers often envision things as appearing one way, when they look quite different in reality once dancers execute them.

Animated steps can remove some of the mystery, giving you the ability to input what you hope a dance will look like, and see it played out before you pass on the artistry to your students. An app which allows choreographers to design dances with animated figures and diagrams can be both creative and innovative. Using these animations, a dancer can map out each and every step of choreography before even entering the studio, reducing stress and confusion at practice. Some dancers can save literally hours of rehearsal time by working through these animations before taking the steps into the studio. One of the best features of such dance software is the ability to upload video recordings of your actual dancers in rehearsal, and then convert them into animations so that you can look at the moves, switch things around, and really decide what works best.

Life is way too short to bore ourselves with a job that is devoid of passion. Always add a pinch of your own personality and character to your work. Such a life is what we call "worth living".

Dance and computer programming may seem like two distant worlds with absolutely nothing in common. But programming has varied applications, and expanding its horizon by combining it with different fields is really interesting. Combining technology and a hobby can give interesting results. I have more than 4 years of stage-level experience in the performing arts.

I am currently pursuing my studies in programming, and the challenge is to combine both fields and find where exactly they meet. One such meeting point is animation.

The whole concept of animated dance steps may seem odd and out of the ordinary when you are used to taking traditional ballroom dance classes or learning from physical rehearsal or reading a written description of steps. However, using animation has many advantages.

Aneesh

II - MCA

Christ University

MOONWALKER ONE

HE WAS PRONE TO MOTION SICKNESS. HE ONCE MISJUDGED THE ALTITUDE WHILE LANDING A PLANE. HIS APPLICATION TO BE AN ASTRONAUT WAS LATE. BUT PROVIDENCE MUST HAVE A SENSE OF HUMOUR, BECAUSE NONE OF THESE STOPPED NEIL ARMSTRONG FROM BECOMING THE FIRST MAN ON THE MOON.

Armstrong's first space flight took place on Gemini 8 in 1966, five years after Yuri Gagarin became the first man to travel into outer space. As command pilot of Gemini 8, Armstrong, together with pilot David Scott, was responsible for the very first docking between two space vehicles. However, because the docked spacecraft started to roll, the mission had to be aborted before some of its objectives could be met. Nevertheless, it was a milestone in NASA's space programme. Lessons learned from it enabled proper control of docked vehicles, which was crucial when astronauts were eventually sent to the Moon. Armstrong had flown in combat, and his courage was never doubted. Yet, understanding fully the risk of space travel, his joy must have been tempered with trepidation when he was appointed commander of Apollo 11. This was to be America's first attempt to land a manned vehicle on the Moon.

Many boys grow up dreaming of becoming astronauts. But when Neil Alden Armstrong was born on 5 August 1930, space travel was but a distant dream, and the word 'astronaut' had just been coined. Two years into his aeronautical engineering course at Purdue University, Armstrong was called up for military service as a naval aviator. He saw action in the Korean War and returned with several medals. He eventually finished college, and years later got a Master's degree in aerospace engineering from the University of Southern California. He was selected to be a member of the astronaut corps, despite the fact that his application arrived past the deadline. As fate would have it, a former colleague saw it and sneaked it into the stack. Armstrong had stated publicly that he believed there was only a 50 percent chance of landing on the Moon. Nevertheless, he not only proceeded to prepare for the flight with dedication, but also maintained his sense of humour. It was his character, modest and cautious, that led NASA management to choose Armstrong to be the first to step onto the Moon. During the launch of Apollo 11, Armstrong's heart raced to 109 beats a minute.

At 2:56 UTC on 21st July 1969, Armstrong set his left boot on the Moon's surface, watched by a global television audience of 500 million (a figure only surpassed when Prince Charles and Princess Diana wed).

Armstrong then uttered the immortal words:

"That's one small step for man, one giant leap for mankind."

Jonathan Fidelis Paul

I MCA

Christ University

KINGO

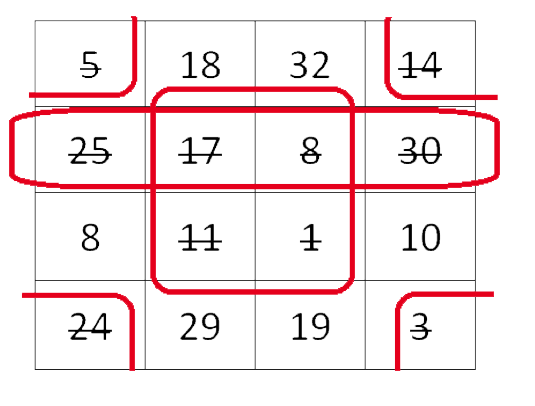

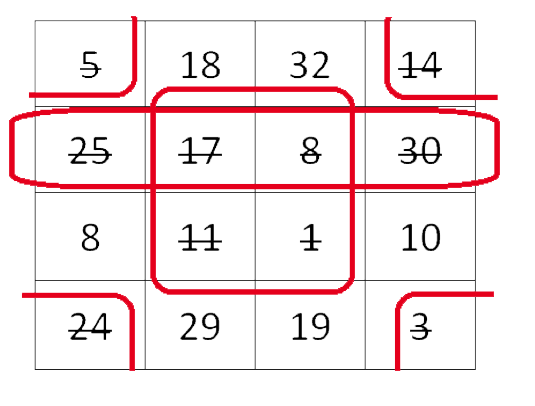

RULES FOR THE GAME:

1. Make a grid of $n \times n$.

2. Numbers (N) need to be filled into the grid.

3. $N \in [1,\, n^2 \times 2]$

4. Chances per player = $n^2$ / (number of players). If a fraction results, take the closest integer below it.

5. Players will alternately call out a number of their choice and mark it in their grid.

6. After all chances are completed, the marked numbers will be considered as 1 and the unmarked as 0 (as in the SOP form).

7. The K-map is then solved, and the player with the best solution (i.e. judged by the rules of solving a K-map) wins. For example, even though the groupings are made on different maps, an octet is always better than two quads in this game.

8. So we have to achieve

i) Maximum grouping.

ii) Minimum groups.

So, for example, we make a grid of 4x4.

Then the numbers (N) to be filled inside the grid (one number per cell) will range from 1 to $4^2 \times 2$ (= 32).

Also, if 2 players are playing, then the number of chances per player would be 8 (= $4^2/2$).

Here, as we can see, after all calls the marked numbers are treated as 1 and the unmarked as 0.

Then the grid is solved as a K-map. After solving, the player with the maximum grouping and minimum groups wins.

For example, here we have 3 quads.

If another player has 2 quads and 2 pairs, then the 1st player will be declared the winner.
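The arithmetic behind rules 3, 4 and 6 can be sketched in a few lines of Python. The function names `kingo_setup` and `to_kmap` and the small 2x2 grid are hypothetical, chosen only to illustrate the rules; solving the resulting K-map is left to the players.

```python
def kingo_setup(n, players):
    """Return (largest number allowed, chances per player) for an n x n grid."""
    largest = n * n * 2           # rule 3: numbers lie in [1, n^2 * 2]
    chances = (n * n) // players  # rule 4: round down if a fraction results
    return largest, chances


def to_kmap(grid, marked):
    """Rule 6: marked numbers become 1, unmarked numbers become 0."""
    return [[1 if number in marked else 0 for number in row] for row in grid]


largest, chances = kingo_setup(4, 2)
print(largest, chances)  # 32 8, matching the 4x4 example

# A tiny hypothetical 2x2 grid in which 9 and 30 were called out.
print(to_kmap([[5, 9], [12, 30]], {9, 30}))
```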

Have you ever played Bingo?

No?

But you surely might have solved a Karnaugh map, right?

Here's a game which takes a little from both and adds a tweak of its own.

It's KINGO.

Ashish N Koushik

I - MCS

Christ University

MOBILE OS

A glimpse at the mobile software industry

In 2007

-Apple introduced the first iOS on a device branded the iPhone. It is closed-source, proprietary software built on the Darwin core OS for the ARM CPU architecture. The latest version is expected to be iOS 8, to be released in 2014.

In 2008

-The OHA (Open Handset Alliance) released Android OS (Google Inc.), which is free and open source, runs on ARM, MIPS and x86 platforms, and currently holds 84% (as of Feb 2014) of the world's mobile market. The latest version of Android is 5.0, codenamed L.

In 2009

-Samsung introduced Bada OS, a mix of open source and proprietary technologies. Samsung later discontinued Bada development and moved to Tizen OS.

In 2010

-Microsoft came up with a Windows mobile OS, Windows Phone, working on the ARM platform. It is proprietary software, and the latest version for mobile devices is Windows NT 8.1 (2014).

In 2011

-MeeGo, a collaboration between Nokia and Intel, was introduced as the first native Linux distro for mobile. It was discontinued in Sept 2011 in favour of Tizen OS.

In 2012

-Mozilla introduced the first Firefox OS, which runs on ARM, x86 and x64 platforms and is natively programmed in HTML5, CSS, JS and C++.

-Tizen was introduced as a closed-source distro that runs on ARM and x86 platforms. Still in beta, it is expected to be released next year (2015).

In 2013

-Canonical introduced Ubuntu Touch, a version of the Linux distro exclusively for mobile devices, which runs on ARM and x86 platforms and is programmed in HTML5 (Unity Web API) and native C, C++ and QML.

-Sailfish OS, developed by Jolla, runs on ARM and x86_64 platforms and is an open source, pure native Linux distro for mobile devices.

With emerging technologies and newer mobile devices, it is worth exploring the limits of these ARM devices with newer operating systems.

Even before smartphones were introduced, embedded systems were the key platform for mobile devices, which not only lacked a graphical interface but also had limited functionality. In 1973 John F. Mitchell and Dr. Martin Cooper demonstrated the first handheld cell phone, which weighed around 2 kilograms. Over the years the need for mobile devices increased rapidly, which led to the very first smartphone, the IBM Simon, in '93, with a touchscreen, email and a personal assistant. Soon the market was flooded with new devices running proprietary software and a few open source OSs as well.

In '99 Nokia came out with its revolutionary Symbian OS, which was incorporated in the launch of Ericsson handsets, becoming a flagship for the modern mobile OS. Over the past decade multiple operating systems were introduced by several companies, but we are familiar with only a few of those.

The first few OSs that come to mind are iOS, Android, Windows and BlackBerry. Over 15 fully functional operating systems have been built on several platforms over the past decade, and here are a few:

Rupen Paul

II - MCA

Christ University

QUANTUM CRYPTOGRAPHY

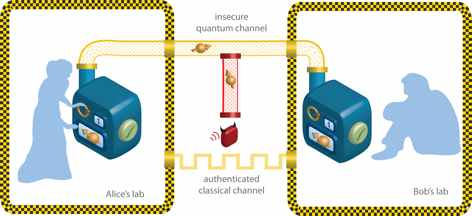

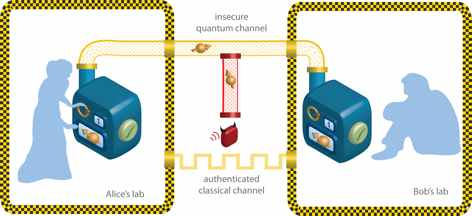

Though the whole concept of quantum cryptography may seem highly complex as well as expensive, it is highly relevant, if not indispensable, in the field of cryptography. Because qubits can exist as a zero and a one at the same time, we can perform many computations simultaneously. In other words, quantum cryptography provides us with machinery for performing complex cryptographic computations that are otherwise expensive for a classical computer. This feature is of great use as it helps us break codes such as RSA.

Apart from providing immense computational abilities for breaking codes such as RSA, quantum cryptography also provides an efficient key distribution mechanism called Quantum Key Distribution, or QKD. Using QKD, two parties, Alice and Bob, can exchange keys very securely without the threat of an eavesdropper. Even if an eavesdropper intercepts the message being passed, the interception itself introduces errors in the message, and thus the presence of the eavesdropper will be detected.
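How an eavesdropper gives herself away can be illustrated with a toy simulation. The sketch below is written in the style of BB84, a well-known QKD protocol that the article itself does not name, so treat that choice, the function name `bb84_error_rate` and the intercept-and-resend attack as assumptions. It is a purely classical model: measuring in the wrong basis is represented by a random outcome.

```python
import random


def bb84_error_rate(n_bits, eavesdrop, seed=0):
    """Fraction of mismatched bits among those where Alice and Bob
    happened to use the same basis (the 'sifted' key)."""
    rng = random.Random(seed)
    kept = errors = 0
    for _ in range(n_bits):
        bit = rng.randint(0, 1)          # Alice's secret bit
        alice_basis = rng.randint(0, 1)  # 0 or 1: which basis she encodes in
        sent_bit, sent_basis = bit, alice_basis
        if eavesdrop:
            # Eve measures in a random basis and resends what she saw.
            eve_basis = rng.randint(0, 1)
            if eve_basis != sent_basis:
                sent_bit = rng.randint(0, 1)  # wrong basis: random result
            sent_basis = eve_basis
        bob_basis = rng.randint(0, 1)
        bob_bit = sent_bit if bob_basis == sent_basis else rng.randint(0, 1)
        if bob_basis == alice_basis:     # only these positions are kept
            kept += 1
            errors += bob_bit != bit
    return errors / kept if kept else 0.0


quiet = bb84_error_rate(2000, eavesdrop=False)
tapped = bb84_error_rate(2000, eavesdrop=True)
print(quiet, tapped)
```

Without an eavesdropper the sifted bits always agree. With one, roughly a quarter of them are expected to disagree, which Alice and Bob can notice by comparing a sample of their bits.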

This method of key distribution is mathematically proven to be secure. Even though quantum cryptography is an exciting field, there are many limitations that hinder its growth. For one, it uses quantum computers, which are expensive to design, build and maintain. Another problem is the lack of a smooth, affordable optical medium to transmit photons from a sender to a receiver.

Quantum cryptography is an upcoming area of research in the field of computer science. To understand the importance of the whole idea of quantum cryptography, one should first understand the importance of cryptography itself. Cryptography is the science, as well as the art, of maintaining the secrecy of messages. It has been in use and development since the time of emperors like Caesar. Of late, cryptography has seen rapid usage and development due to the technology revolution. The widespread usage of technology has led to information that is exclusive to certain organizations. In other words, information that is meant for one organization should not be accessed by any other organization.

Traditionally, codes are realized using classical bits, each being either a one or a zero. But in quantum cryptography codes are realized using quantum bits. A quantum bit can exist as both a zero and a one at the same time, as well as a zero or a one independently. This is called superposition. Quantum bits, or qubits as they are referred to, only operate on quantum computers, which are very small and require highly sophisticated cooling mechanisms such as liquid helium cooling.

Pawar Valent

III - MCA

Christ University

LUHN's ALGORITHM

Each credit card follows a specific standard in terms of its size and numbering method, as decided by the International Organization for Standardization (ISO/IEC 7812-1:1993) and the American National Standards Institute (ANSI X4.13). A credit card number is divided into several parts, which combine into a single card number from which the check digit is generated. The first digit, the Major Industry Identifier (MII), represents the category of the card issuer. It is followed by the Issuer Identifier, which, including the MII digit, is a 6-digit number; this is done according to the standard defined in ISO 3166 and differs in every country. Part of every credit card number is the individual account identifier, and the final digit is a check digit. The maximum length of a credit card number is 19 digits. Since the initial 6 digits are the issuer identifier and the final digit is the check digit, the maximum length of the account number field is 19 - 7 = 12 digits, so there are 10 raised to the 12th power, or 1,000,000,000,000, possible account numbers.

Here the term "valid" means "mathematically valid". A card that is mathematically valid is not necessarily a working card, as the check does not validate the expiry date or the card security code (CVV, CVC). Luhn's algorithm detects any single-digit error and almost all transpositions of adjacent digits. It does not, however, detect the transposition of "09" to "90" (or vice versa), nor the twin errors "22" to "55", "33" to "66" and "44" to "77" (or vice versa). An extension of Luhn's algorithm, the Luhn mod N algorithm, also supports non-numerical strings.

Luhn's algorithm can be used to generate random, mathematically valid credit card numbers. However, the possibility of tracking down any real credit card account this way is minimal, as the CVV or CVV2 acts as an extra security feature of the card.

Luhn's algorithm was devised by Hans Peter Luhn; patent No. 2,950,048 was filed on January 6, 1954 and granted on August 23, 1960. "For a card with an even number of digits, double every odd numbered digit and subtract 9 if the product is greater than 9. Add up all the even digits as well as the doubled-odd digits, and the result must be a multiple of 10 or it's not a valid card. If the card has an odd number of digits, perform the same addition doubling the even numbered digits instead." This method is also known as the "Mod 10" method.
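The rule quoted above can be sketched in a few lines of Python. Doubling "every odd numbered digit" of an even-length number, or every even numbered digit of an odd-length one, amounts to the same thing as doubling every second digit counted from the right, which is how this sketch does it. The function names luhn_is_valid and luhn_check_digit are chosen here for illustration only, and the sample numbers are standard test values, not real cards.

```python
def luhn_is_valid(number):
    """Return True if the digits in `number` pass the Mod 10 check."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if not digits:
        return False
    total = 0
    # Walk from the rightmost digit (the check digit) to the left.
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:      # every second digit from the right
            digit *= 2
            if digit > 9:          # same as adding the two digits of the product
                digit -= 9
        total += digit
    return total % 10 == 0


def luhn_check_digit(partial):
    """Return the check digit that makes `partial` + digit valid."""
    digits = [int(ch) for ch in partial if ch.isdigit()]
    total = 0
    # The check digit will sit to the right, so the last digit of
    # `partial` is one of the doubled positions.
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 0:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return (10 - total % 10) % 10


print(luhn_is_valid("79927398713"))      # a commonly used test number
print(luhn_check_digit("7992739871"))
```

Passing this check only shows that a number is mathematically valid; it says nothing about whether an account actually exists.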


Sona Mary Francis

II - MCS

Christ University

Steganography

Since the rise of the Internet, security of information has been one of the most important factors of information technology and communication. Cryptography was created as a technique for securing the secrecy of communication, and many different methods have been developed to encrypt and decrypt data in order to keep the message secret. Unfortunately, it is sometimes not enough to keep the contents of a message secret; it may also be necessary to keep the existence of the message secret. The technique used to implement this is called steganography.

The word steganography is derived from the Greek words "stegos" meaning "cover" and "grafia" meaning "writing" defining it as "covered writing". In image steganography the information is hidden exclusively in images.

In Histories, the Greek historian Herodotus writes of a nobleman, Histiaeus, who needed to communicate with his son-in-law in Greece. He shaved the head of one of his most trusted slaves and tattooed the message onto the slave's scalp. When the slave's hair grew back, the slave was dispatched with the hidden message. In the Second World War, the microdot technique was developed by the Germans. Information, especially photographs, was reduced in size until it was the size of a typed period. A normal cover message was then sent over an insecure channel, with one of the periods on the paper containing the hidden information, which made it extremely difficult to detect. Today steganography is mostly used on computers, with digital data being the carriers and networks being the high-speed delivery channels.


Steganography is the art of hiding the fact that communication is taking place, by hiding information in other information. Many different carrier file formats can be used, but digital images are the most popular because of their frequency on the Internet. For hiding secret information in images, there exists a large variety of steganographic techniques. Some are more complex than others, and all of them have their respective strong and weak points. Different applications have different requirements of the steganography technique used. For example, some applications may require absolute invisibility of the secret information, while others require a larger secret message to be hidden. With fingerprinting, on the other hand, different, unique marks are embedded in distinct copies of the carrier object that are supplied to different customers.

Image steganography:

Images are the most popular cover objects used for steganography. In the domain of digital images many different image file formats exist, most of them for specific applications. For these different image file formats, different steganographic algorithms exist. Image steganography techniques can be divided into two groups: those in the image domain and those in the transform domain. Image (spatial) domain techniques embed messages in the intensity of the pixels directly, while for the transform (frequency) domain, images are first transformed and then the message is embedded in the image. Image domain techniques encompass bit-wise methods that apply bit insertion and noise manipulation, and are sometimes characterised as "simple systems". Steganography in the transform domain involves the manipulation of algorithms and image transforms. These methods hide messages in more significant areas of the cover image, making the hiding more robust. Many transform domain methods are independent of the image format, and the embedded message may survive conversion between lossy and lossless compression.
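As a rough illustration of the bit-wise image domain approach, the sketch below hides a short message in the least significant bit of each pixel intensity. It is a simplified example under stated assumptions: the "image" is just a list of grey-level values from 0 to 255, and the names embed_lsb and extract_lsb are made up for this illustration. Real tools work on actual image files and usually add encryption and a length header.

```python
def embed_lsb(pixels, message):
    """Return a copy of `pixels` with `message` (bytes) hidden in the lowest bits."""
    # Turn the message into a flat list of bits, most significant bit first.
    bits = [(byte >> shift) & 1 for byte in message for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("cover image is too small for this message")
    stego = list(pixels)
    for index, bit in enumerate(bits):
        # Clear the lowest bit of the pixel, then set it to the message bit.
        stego[index] = (stego[index] & 0xFE) | bit
    return stego


def extract_lsb(pixels, n_bytes):
    """Read `n_bytes` bytes back out of the lowest bits of `pixels`."""
    out = bytearray()
    for byte_index in range(n_bytes):
        value = 0
        for bit_index in range(8):
            value = (value << 1) | (pixels[byte_index * 8 + bit_index] & 1)
        out.append(value)
    return bytes(out)


# A made-up 64-pixel greyscale "image" used as the cover object.
cover = [(i * 37) % 256 for i in range(64)]
stego = embed_lsb(cover, b"Hi")
print(extract_lsb(stego, 2))
```

Each pixel changes by at most one intensity level, which is why this kind of change is hard to see, but it is also why the hidden message is easily destroyed by lossy compression.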

Nisha Singh

III MCA

Christ University

GRID COMPUTING

The early efforts in Grid computing started as a project to link supercomputing sites, but have now grown far beyond their original intent. In fact, many applications can benefit from the Grid infrastructure, including collaborative engineering, data exploration, high-throughput computing, and of course distributed supercomputing. Moreover, due to the rapid growth of the Internet and Web, there has been a rising interest in Web-based distributed computing, and many projects have been started that aim to exploit the Web as an infrastructure for running coarse-grained distributed and parallel applications. In this context, the Web has the capability to be a platform for parallel and collaborative work as well as a key technology to create a pervasive and ubiquitous Grid-based infrastructure.

The "Grid problem" is defined as flexible, secure, coordinated resource sharing among dynamic collections of individuals, institutions, and resources, also referred to as virtual organizations. In such settings, it's common to encounter unique authentication, authorization, resource access, resource discovery, and other challenges. It is this class of problem that is addressed by Grid technologies. An extensible and open Grid architecture is one in which protocols, services, application programming interfaces, and software development kits are categorized according to their roles in enabling resource sharing.

Grid computing, most simply stated, is distributed computing taken to the next evolutionary level. The goal is to create the illusion of a simple yet large and powerful self-managing virtual computer out of a large collection of connected heterogeneous systems sharing various combinations of resources. It's important to have a compact set of inter-grid protocols to enable interoperability among different Grid systems. Grid technologies relate to other contemporary technologies, including enterprise integration, application service provider, storage service provider, and peer-to-peer computing.

Grid concepts and technologies complement and have much to contribute to these other approaches.

The standardization of communications between heterogeneous systems created the Internet explosion. The emerging standardization for sharing resources, along with the availability of higher bandwidth, is driving a possibly equally large evolutionary step in grid computing.

The last decade has seen a substantial increase in commodity computer and network performance, mainly as a result of faster hardware and more sophisticated software. Nevertheless, there are still problems, in the fields of science, engineering, and business, which cannot be effectively dealt with using the current generation of supercomputers. In fact, due to their size and complexity, these problems are often very numerically and/or data intensive and consequently require a variety of heterogeneous resources that are not available on a single machine. A number of teams have conducted experimental studies on the cooperative use of geographically distributed resources unified to act as a single powerful computer. This new approach is known by several names, such as metacomputing, scalable computing, global computing, Internet computing, and more recently Grid computing. Grid computing has emerged as an important new field.

Tapasya

II MCS

Christ University

Software Innovations

In 1951, Maurice Wilkes, Stanley Gill, and David Wheeler developed the concept of subroutines in programs to create re-usable modules, which formalized the concept of software development. In 1952, Alick E. Glennie wrote "Autocode", which translated symbolic statements into machine language for the Manchester Mark I computer. Auto-coding became a common term for assembly language programming. Next came the idea of compilers from Grace Murray Hopper, who described techniques to compile pre-written code segments written in a high-level language. She later focused on developing COBOL. A predecessor of the compiler concept, the "sort-merge generator", was developed by Betty Holberton in 1951. In 1955, a very important principle called the "stack principle" was proposed by Friedrich L. Bauer and Klaus Samelson at the Technical University Munich. This served as a basis for compiler construction.

In 1960, the packet-switching network was a big achievement in the area of computer networks. In the same year, C. A. R. (Tony) Hoare developed the Quicksort algorithm, which was eventually published in 1961. Sorting is an extremely common operation, and this algorithm made it efficient in practice. In 1964, the first "word processor", which combined the features of the electric typewriter with a magnetic tape drive, was introduced by IBM. For the first time, typed material could be edited. In 1967, object-oriented (OO) programming was introduced to the world by the Norwegian Computing Centre's Ole-Johan Dahl and Kristen Nygaard when Simula 67 was released. OO programming was later popularized in Smalltalk-80, C++, Java, and C#. This approach served as the backbone of the graphical user interfaces that later became widely used.

In 1970, E. F. Codd introduced the relational model and relational algebra in a famous article in the Communications of the ACM. This is the theoretical basis for relational database systems and their query language, SQL. The first commercial relational database, the Multics Relational Data Store (MRDS), was released in June 1976. In the early 1980s, DARPA encouraged the development of TCP/IP implementations for many systems. After TCP/IP had become wildly popular, Microsoft added support for TCP/IP to Windows. In 1982, the computer virus, a program that infects other programs by modifying them to include a possibly evolved copy of itself, was investigated. While not a positive development, this was certainly an innovation. The program "Elk Cloner" is typically identified as the first "in the wild" computer virus. In 1994, the World Wide Web Worm (WWWW), an early web search engine, indexed 110,000 web pages.

In the words of Bill Gates, software innovation, like almost every other kind of innovation, requires the ability to collaborate and share ideas with other people, and to sit down and talk with customers and get their feedback and understand their needs. The era of innovation began in 1837, when the renowned scientist Charles Babbage proposed the idea of an analytical engine, a mechanical device that takes instructions from a program instead of being designed to do only one task. Unfortunately, Babbage's dream of building it could not come true until general-purpose computers were built.

In 1854, "An Investigation of the Laws of Thought" by George Boole was published. This served as the basis for symbolic and logical reasoning, which became the basis of computing. In 1936, the idea of Turing machines came up when Alan Turing wrote his paper "On Computable Numbers, with an Application to the Entscheidungsproblem", where he first described Turing machines. This mathematical construct showed the strengths and limitations of computer software. In 1945, in the "First Draft of a Report on the EDVAC", the concept of storing a program in the same memory as data was illustrated by John von Neumann. This is the fundamental concept for software manipulation on which all software development is based.

Santosh

II MCS

Christ University

Li - Fi [Light Fidelity]

Researchers at the Heinrich Hertz Institute in Berlin, Germany, have reached data rates of over 500 megabytes per second using a standard white-light LED. The technology was demonstrated at the 2012 Consumer Electronics Show in Las Vegas using a pair of Casio smartphones to exchange data using light of varying intensity given off from their screens, detectable at a distance of up to ten meters. In October 2011, a number of companies and industry groups formed the Li-Fi Consortium to promote high-speed optical wireless systems and to overcome the limited amount of radio-based wireless spectrum available by exploiting a completely different part of the electromagnetic spectrum. The consortium believes it is possible to achieve more than 10 Gbps, theoretically allowing a high-definition film to be downloaded in 30 seconds. This brilliant idea was first showcased by Harald Haas from the University of Edinburgh, UK, in his TED Global talk on VLC.

He explained, "Very simple, if the LED is on, you transmit a digital 1, if it's off you transmit a 0. The LEDs can be switched on and off very quickly, which gives nice opportunities for transmitting data." We just have to vary the rate at which the LEDs flicker depending upon the data we want to encode. Further enhancements can be made to this method, like using an array of LEDs for parallel data transmission, or using mixtures of red, green and blue LEDs to alter the light's frequency, with each frequency encoding a different data channel. Such advancements promise a theoretical speed of 10 Gbps, meaning you can download a full high-definition film in just 30 seconds. Simply awesome! But blazingly fast data rates and depleting bandwidth worldwide are not the only reasons that give this technology an upper hand. Since Li-Fi uses just light, it can be used safely in aircraft and hospitals, which are prone to interference from radio waves.

This can even work underwater, where Wi-Fi fails completely, thereby throwing open endless opportunities for military operations. Imagine only needing to hover under a street lamp to get public internet access, or downloading a movie from the lamp on your desk. Radio waves are replaced by light waves in a new method of data transmission which is being called Li-Fi. Light-emitting diodes (commonly referred to as LEDs and found in traffic and street lights, car brake lights, remote control units and countless other applications) can be switched on and off faster than the human eye can detect, causing the light source to appear to be on continuously, even though it is in fact "flickering". This invisible on-off activity enables a kind of data transmission using binary codes: switching on an LED is a logical '1', switching it off is a logical '0'.
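The on-off idea described above can be sketched in Python as follows. This is only a toy model: the LED is represented by a list of True (on) and False (off) values, one per bit, and the function names to_led_states and from_led_states are invented for this illustration. A real Li-Fi link would also need timing, synchronisation and error handling, none of which is shown here.

```python
def to_led_states(data):
    """Turn bytes into a sequence of LED states: True = on (1), False = off (0)."""
    states = []
    for byte in data:
        for shift in range(7, -1, -1):       # most significant bit first
            states.append(bool((byte >> shift) & 1))
    return states


def from_led_states(states):
    """Rebuild the original bytes from a sequence of observed LED states."""
    if len(states) % 8 != 0:
        raise ValueError("expected a whole number of bytes")
    out = bytearray()
    for start in range(0, len(states), 8):
        value = 0
        for state in states[start:start + 8]:
            value = (value << 1) | (1 if state else 0)
        out.append(value)
    return bytes(out)


signal = to_led_states(b"Li-Fi")
print("".join("1" if s else "0" for s in signal))
print(from_led_states(signal))
```

The receiver simply watches the light and applies the reverse mapping, so the same eight on-off steps always give back the same byte.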

Li-Fi is the transmission of data through illumination: it takes the fiber out of fiber optics by sending data through an LED light bulb that varies in intensity faster than the human eye can follow. Li-Fi is the term some have used to label this fast and cheap wireless communication system, which is the optical version of Wi-Fi. The term was first used in this context by Harald Haas in his TED Global talk on Visible Light Communication. "At the heart of this technology is a new generation of high brightness light-emitting diodes," says Harald Haas from the University of Edinburgh, UK. "Very simply, if the LED is on, you transmit a digital 1, if it's off you transmit a 0," Haas says. "They can be switched on and off very quickly, which gives nice opportunities for transmitted data." It is possible to encode data in the light by varying the rate at which the LEDs flicker on and off to give different strings of 1s and 0s. Other groups are using mixtures of red, green and blue LEDs to alter the light frequency, with each frequency encoding a different data channel. Li-Fi, as it has been dubbed, has already achieved blisteringly high speeds in the lab.

Nisha Singh

III MCA

Christ University

SAP HANA

The elimination of aggregates and relational table indices and the associated maintenance can greatly reduce the total cost of ownership.

As hardware advances by leaps and bounds, to the point where even a mobile device now has a quad-core processor, enterprise servers with many cores and large RAM (2 TB and upwards) are now not only affordable but becoming common. Anticipating these advances, SAP invested early in building an in-memory database called SAP HANA. SAP HANA is a product that behaves as a database for analytics as well as transactions at the same time.

SAP HANA Appliance refers to HANA DB as delivered on partner certified hardware (see below) as an appliance. It also includes the modeling tools from HANA Studio as well as replication and data transformation tools to move data into HANA DB.

SAP HANA One refers to a deployment of SAP HANA certified for production use on the Amazon Web Services (AWS) cloud.

SAP HANA Application Cloud refers to the cloud based infrastructure for delivery of applications (typically existing SAP applications rewritten to run on HANA).

HANA DB takes advantage of the low cost of main memory (RAM), the data processing abilities of multi-core processors and the fast data access of solid-state drives relative to traditional hard drives to deliver better performance of analytical and transactional applications. It offers a multi-engine query processing environment which allows it to support relational data (with both row- and column-oriented physical representations in a hybrid engine) as well as graph and text processing for semi-structured and unstructured data management within the same system. HANA DB is 100% ACID compliant.
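The difference between row- and column-oriented representations can be pictured with a small Python sketch. This is a generic illustration and not SAP HANA code or its API: the table, the field names and the helper functions to_columns, total_row_store and total_column_store are all made up for the example.

```python
# Row-oriented: each record is kept together, which suits transactions
# that read or write one whole order at a time.
rows = [
    {"order_id": 1, "region": "South", "amount": 120.0},
    {"order_id": 2, "region": "North", "amount": 80.5},
    {"order_id": 3, "region": "South", "amount": 42.0},
]


def to_columns(records):
    """Rearrange a list of records into one list per field (column-oriented)."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


def total_row_store(records, field):
    """Sum one field by visiting every complete record."""
    return sum(record[field] for record in records)


def total_column_store(columns, field):
    """Sum one field by scanning only that field's values."""
    return sum(columns[field])


columns = to_columns(rows)
print(total_row_store(rows, "amount"), total_column_store(columns, "amount"))
```

Both layouts give the same answer; the point is that an analytical query over one field only has to touch that one list in the column layout, while a transaction on a single order is more natural in the row layout.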

While HANA has been called variously an acronym for HAsso's New Architecture (a reference to SAP founder Hasso Plattner) and High Performance ANalytic Appliance, HANA is a name not an acronym.

SAP HANA enables you to perform real-time OLAP analysis on an OLTP data structure. As a result, you can address today's demand for real-time business insights by creating business applications that previously were neither feasible nor cost-effective.

SAP HANA is the newest in-memory database technology, a product that behaves as a database for analytics as well as transactions at the same time. In addition, moving on from a complex world of n-tier architecture, meeting the extreme requirements of high-performance computing is now also a reality. Working with SAP HANA in clustered mode is the subject of ongoing research by SAP, which has recently built a 100 TB system to validate it.

SAP HANA is a completely re-imagined platform for real-time business and is the fastest growing product in SAP history.

HANA is SAP's newest database technology and perhaps the most far reaching development for SAP in this decade. The announcement of the SAP HANA platform has created a lot of buzz in the IT and business world.

As new business demands challenge the status quo, the scale is larger, expectations are greater, and the stakes are higher. New-breed IT systems must be able to evaluate, analyze, predict, and recommend – and do so in real time. An in-memory approach is the only way to tackle a real-time-data future that includes new data types such as social media monitoring and Web-automated sensors and meter readings.

Today's business users need to react much more quickly to changing customer and market environments. They demand dynamic access to raw data in real time. SAP HANA empowers users with flexible, on-the-fly data modelling functionality by providing non-materialized views directly on detailed information. SAP HANA liberates users from the wait time for data model changes and database administration tasks, as well as from the latency required to load the redundant data storage required by traditional databases.

Apoorva K.R

III MCA

Christ University

DIGITAL JEWELLERY

Let's look at the various components that are inside a cell phone:

Microphone

Receiver

Touchpad

Display

Circuit board

Antenna

Battery

IBM has developed a prototype of a cell phone that consists of several pieces of digital jewellery that will work together wirelessly, possibly with Bluetooth wireless technology, to perform the functions of the above components.

Here are the pieces of IBM's computerized jewellery phone and their functions:

Earrings - Speakers embedded into these earrings will be the phone's receiver.

Necklace - Users will talk into the necklace's embedded microphone.

Ring - Perhaps the most interesting piece of the phone, this "magic decoder ring" is equipped with light-emitting diodes (LEDs) that flash to indicate an incoming call. It can also be programmed to flash different colors to identify a particular caller or indicate the importance of a call.

Bracelet - Equipped with a video graphics array (VGA) display, this wrist display could also be used as a caller identifier that flashes the name and phone number of the caller.

With a jewellery phone, the keypad and dialing function could be integrated into the bracelet, or else dumped altogether -- it's likely that voice-recognition software will be used to make calls, a capability that is already commonplace in many of today's cell phones. Simply say the name of the person you want to call and the phone will dial that person. IBM is also working on a miniature rechargeable battery to power these components.

IBM's magic decoder rings will flash when you get a call. In addition to changing the way we make phone calls, digital jewellery will also affect how we deal with the ever-increasing bombardment of e-mail. Imagine that the same ring that flashes for phone calls could also inform you that e-mail is piling up in your inbox. This flashing alert could also indicate the urgency of the e-mail.

There are technologies way beyond one's imagination. The key is to never limit your thoughts to only what is currently available. Let your chain of thoughts flow and take the shape of something that is beyond one's expectations. Give life to your thoughts and ideas, create, and make this world a better place to live.

It's a mobile world out there, but very little is known about digital jewellery. Soon, cell phones will take a totally new form, appearing to have no form at all. Instead of one single device, cell phones will be broken up into their basic components and packaged as pieces of digital jewellery. Each piece of jewellery will contain a fraction of the components found in a conventional mobile phone, according to IBM. Together, the digital-jewellery cell phone should work just like a conventional cell phone.

Dismi Paul

II MCS

Christ University

Smart grid

The smart grid architecture model consists of five layers. They are the business layer, function layer, information layer, communication layer and component layer. The business layer represents the business view on the information exchange related to smart grids. The function layer describes functions and services, including their relationships, from an architectural viewpoint. The information layer describes the information that is being used and exchanged between functions, services and components. The emphasis of the communication layer is to describe protocols and mechanisms for the interoperable exchange of information between components in the context of the underlying use case, function or service and related information objects or data models.

The emphasis of the component layer is the physical distribution of all participating components in the smart grid context. The features of smart grid are reliability, flexibility in network topology, efficiency, load adjustments, Peak curtailment/leveling and time of use pricing, Sustainability, Market-enabling, Demand response support, Platform for advanced services and Provision megabits, control power with kilobits, sell the rest. The bulk of smart grid technologies are already used in other applications such as manufacturing and telecommunications and are being adapted for use in grid operations. In general, smart grid technology can be grouped into seven key areas.

The challenges faced by smart grids are wide-ranging. As the Smart Grid is broad in its scope, the potential standards landscape is also very large and complex. The fundamental issue is organization and prioritization to achieve an interoperable and secure Smart Grid.

Since all the parts of this network have grown organically over many years, even decades, figuring out where intelligence needs to be added is very complex. The Smart Grid represents a technical challenge that goes way beyond the simple addition of an Information Technology infrastructure on top of an electrotechnical infrastructure.

Smart Grid networks have been implemented in China, the United Kingdom and the United States. The implementation of smart grids is expensive, but once they are implemented they help in the effective utilization of resources. The benefits of smart grid networks are that they identify and resolve faults on the electricity grid, automatically self-heal the grid, monitor power quality and manage voltage, identify devices or subsystems that require maintenance, help consumers optimize their individual electricity consumption (minimize their bills) and enable the use of smart appliances that can be programmed to run on off-peak power.

Smart Grid objectives are driving utilities to increase automation and optimization in their electric power system operations. Each new initiative is expanding the technology mix and putting greater demands on the network communications infrastructure. In the smart grid operation and management, reliable and real-time information and communication networks play a very critical role. By integrating the appropriate information and communication technologies (ICT) infrastructure, automated control, sensing and metering technologies, and energy management techniques, the smart grid has emerged as a solution that empowers utilities and consumers to share the responsibilities of operating and managing the power grid more efficiently. These systems are made possible by two-way communication technology and computer processing that has been used for decades in other industries. They are beginning to be used on electricity networks, from the power plants and wind farms all the way to the consumers of electricity in homes and businesses. They offer many benefits to utilities and consumers -- mostly seen in big improvements in energy efficiency on the electricity grid and in the energy users' homes and offices.

Reshma M

II MCA

Christ University

Brain computing

We all know that systems are controlled using various peripheral devices like the keyboard, mouse, etc. These devices are operated by humans based on the signals generated by the brain. In brain computing, the signals produced by the neurons are read by electrodes implanted into the brain, converted into digital signals and fed into the corresponding system. These interfaces are mainly used for patients with paralysis, motor disorders, etc.

MINIATURE PARYLENE-COATED NEURAL INTERFACING

A set of algorithms and a miniature device are used to position electrodes in neural tissue to obtain high-quality extracellular recordings. Each electrode detects the signals produced by individual neurons, then optimizes the signal quality and maintains the signal over time. The electrodes are coated with a parylene material to make them bio-compatible. The micro-drive offers several advantages over current neural recording devices.

The micro-scale implantable neural interface (MINI) differs from a normal neural interface in many ways. From the neural interface perspective, the MINI provides a 3-D site layout and a larger design space over which the device can be optimized.

From a packaging and neurosurgical perspective, the MINI is considered to be minimally invasive because it accesses the cortex through a quarter-sized hole rather than a large craniotomy. Another attribute of the MINI is the ability to replace devices, either after completing data collection from one electrode penetration or after any sort of device failure. This is an attractive feature which extends the lifetime of the implanted device.

Developments keep happening in the field of brain-computer interfaces (BCI). The latest is that Samsung is carrying out research on building a tablet that works on BCI. There are also BCI devices that are controlled wirelessly using Wi-Fi and Bluetooth.

Brain interface computing, otherwise known as neural interfacing, is a direct communication path between the brain and an external device. As we all know, the brain is made up of neurons. Every time we think, move, work, walk or sit, our neurons are at work. All this work is carried out by electrical signals that are transferred from one neuron to another. These signals generated by the neurons are read and fed into the system in digital format. Research on this started in 1970. There are 3 types of BCIs:

1. Invasive

-Targeted on people with paralysis

-Directly implanted on to the grey matter

-Produces high quality signals

2. Partially Invasive

-BCI device is directly implanted inside the skull

-Produces better resolution signals

3. Non-Invasive

-It is worn on the head

-Easy to wear

-Poor signals

Kamath Aashish

I MCA

Christ University

CROSSWORD PUZZLE

Across:

2] German luxury automobile company.

3] Network structure.

4] French video game developer.

6] Dell's line of gaming laptops & desktops.

8] Image file format.

9] Communication technology similar to Bluetooth. Old phones might have this. New phones definitely won't.

11] IEEE 1394 High Speed Serial Bus.

12] G in GPU.

14] Computer to which all other computers in a network are connected. If this fails, the entire network does.

16] Sony's line of mobile phones.

17] Black hat hacker. A hacker with evil intentions.

19] 16:9 screens generally called...

Down:

1] Pointing device. No, not a pen. Not a mouse either.

2] Form of algebra as well as a data type.

5] Manufacturer of gaming accessories. (Motto: 'For gamers, by gamers').

7] Software development model. Also a cool place to hang out.

10] S in VIRUS. No, not 'Software'.

13] Linux mascot. Also a cute toy.

15] Another word for 'Wireless'.

17] Every phone has this. Well, almost every phone.

18] Algorithm to perform encryption / decryption.

This key is the product of two large prime numbers. Factorizing such a large number is a difficult task for a hacker, so credit card numbers and bank information stay safe. Quantum computing technology will only continue to improve. At the moment, it is impossible to predict which technology will win out in the long term. This is still science, but it may become technology sooner than we expect. Theory also continues to advance. Researchers are actively looking for new algorithms and communication protocols that exploit the properties of quantum systems. Quantum computers can also be used to efficiently simulate other quantum systems. Perhaps someday quantum computers will be used to design the next generation of classical computers. The quantum revolution is already under way, and the possibilities that lie ahead are limitless.
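To get a feel for why a key built from two primes is hard to break, here is a minimal Python sketch. It is only an illustration: the function name `trial_factor` and the tiny primes 1009 and 1013 are assumptions chosen for the example, and real keys use primes hundreds of digits long.

```python
import math

def trial_factor(n):
    """Return (p, q) with p * q == n, found by trial division, or None if n is prime."""
    if n % 2 == 0:
        return 2, n // 2
    # Try every odd candidate divisor up to the square root of n.
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return d, n // d
    return None  # no divisor found, so n has no non-trivial factors

# Toy "key": multiplying two primes is a single cheap operation.
p, q = 1009, 1013
n = p * q

# Recovering p and q means searching through candidate divisors.
print(trial_factor(n))
```

Multiplying takes one step, while this search needs on the order of the square root of n steps. For a number with hundreds of digits that is far beyond what a classical computer can try, which is the gap the key relies on.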

Enormously large data dumps are the norm in the present business intelligence world. Heavy filtering of fields is required to understand and review the data. NTSYS is software used to discover pattern and structure in multivariate data. This means that even bulk de-normalized data can be made to make sense. For example, one may wish to discover that a sample of data points suggests that the samples may have come from two or more distinct populations, or to estimate a phylogenetic tree using the neighbor-joining or UPGMA methods for constructing dendrograms, thereby giving the user a high-level overview of the data itself. Of equal interest is the discovery that the variation in some subsets of variables is highly inter-correlated.
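The UPGMA method mentioned above can be sketched in a few lines of plain Python. This is not how NTSYS itself is implemented; it is a minimal illustration of average-linkage clustering, and the function name `upgma`, the labels A to D and the distance values are all made up for the example.

```python
def upgma(labels, dist):
    """Cluster items with UPGMA (average linkage).

    labels: list of item names.
    dist:   symmetric matrix (list of lists) of pairwise distances.
    Returns the merges in order as (left, right, height) tuples,
    where height is half the distance between the merged clusters.
    """
    n = len(labels)
    clusters = {i: (labels[i], 1) for i in range(n)}  # id -> (name, size)
    d = {(i, j): dist[i][j] for i in range(n) for j in range(i + 1, n)}
    next_id = n
    merges = []
    while len(clusters) > 1:
        # Pick the closest pair of clusters.
        (a, b), dab = min(d.items(), key=lambda kv: kv[1])
        name_a, size_a = clusters.pop(a)
        name_b, size_b = clusters.pop(b)
        new = next_id
        next_id += 1
        # Distance from the merged cluster to every other cluster is the
        # size-weighted average of the two old distances.
        for c in clusters:
            dac = d.pop((min(a, c), max(a, c)))
            dbc = d.pop((min(b, c), max(b, c)))
            d[(c, new)] = (size_a * dac + size_b * dbc) / (size_a + size_b)
        del d[(a, b)]
        clusters[new] = (f"({name_a},{name_b})", size_a + size_b)
        merges.append((name_a, name_b, dab / 2))
    return merges

# Hypothetical distances between four samples.
labels = ["A", "B", "C", "D"]
dist = [
    [0, 2, 6, 10],
    [2, 0, 6, 10],
    [6, 6, 0, 10],
    [10, 10, 10, 0],
]
merges = upgma(labels, dist)
for left, right, height in merges:
    print(left, right, height)
```

Each printed line is one join in the dendrogram: the two groups merged and the height at which the branch is drawn. Reading the merges from first to last gives the tree from its leaves up to its root.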

An example for Anti-Piracy Warning